Problem / Solution Overview

Over the past centuries, urbanisation has led to an increase in convenience in traveling from places to places. At the same time, there is also an increase in the number of journey planning tools to allow commuters to reach their destination on time based on their own preference through public transportation.

Despite these applications, there are still many heterogeneous uncertain factors unaccounted for in journey planning.

In order to enhance journey planning to better suit to individual needs with higher assurance, this project aims to build a recommender system to deduce the ideal time and journey path to commuters accounting for various heterogeneous uncertainties in the context of Singapore.

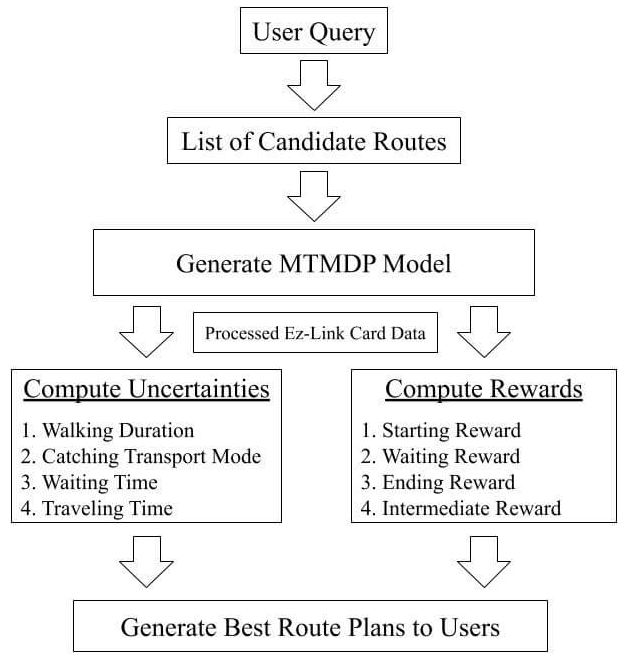

The solution involves generating a Multi-variable Time-dependent Markov Decision Process (MTMDP) model to determine the best route accounting for the factors shown. Ultimately, the best route plan with a certainty index will be shown to each user.

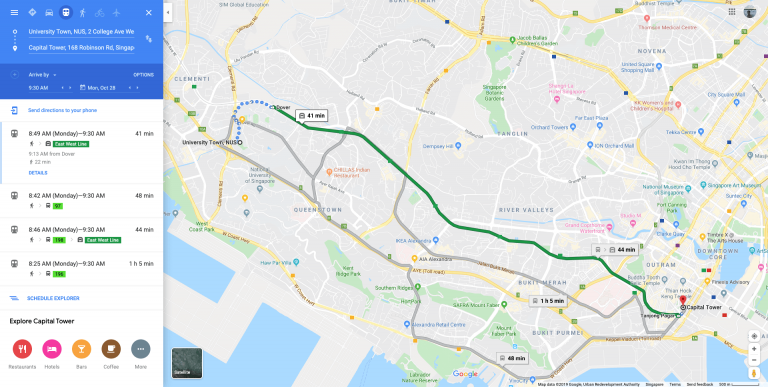

On the left shows a sample result shown from Google map based on the origin, destination and time to reach the final location. While on the right shows the end result from our project where we account for a few additional factors such as crowdedness of the public transport and the rush level of the user. In our final result, we will also generate a certainty index to inform user the likelihood to reach the destination at the shown time if the user follows the plan.

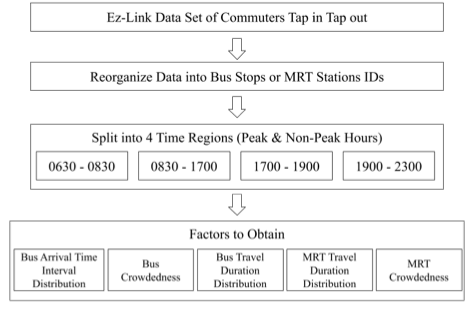

Methodology

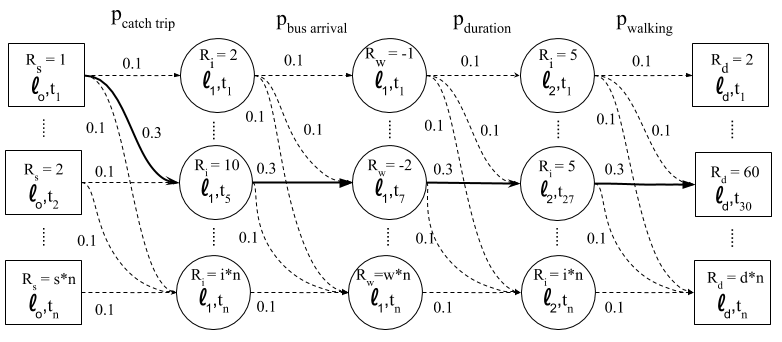

Following the Time-dependent Markov Decision Process (TMDP) (Boyan and Littman, 2001), we will model the journey planning problem into discrete states, actions, rewards and objectives.

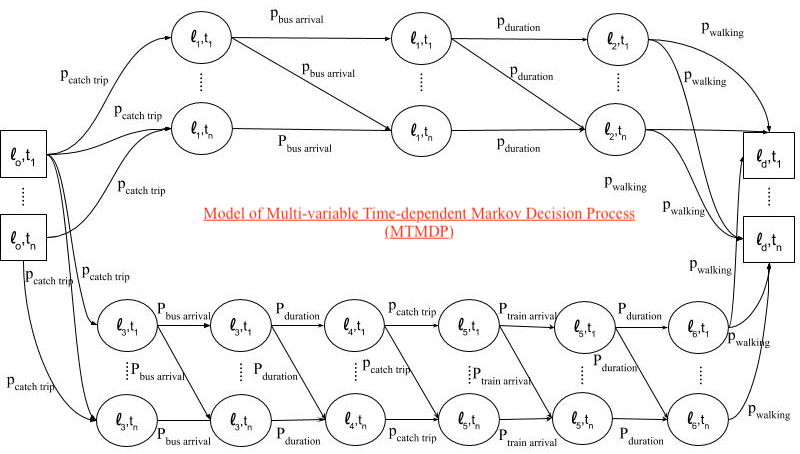

As shown above, given the <li, ti> where lo and ld refers to any location in Singapore while li refers to the location at a bus stop or train station and ti refers to the time in which the user reaches the location. As a result, with a 1 minute temporal dimension defined, each state is multiplied by n times where n is the maximum time taken to complete the entire journey.

At the same time, each edge corresponds to a probability for the user to reach from one state to another state given a period of time. The types of probabilities can be based on the time taken to complete each action such as catching the planned trip, bus arrival, bus duration and walking.

Due to the complexity of the previous model, we will zoom in on a real example to illustrate the concept where the user’s starting position as lo and destination as ld. According to the diagram above, it recommends the user to leave at lot1. the user will have a 0.3 probability of catching the bus at l1t5. Then there is a 0.3 probability for the user to wait for 2 minutes (t7–t5) for the bus to arrive. Upon boarding the bus, the user has a 0,3 probability to reach l2 in 20 minutes (t27–t7). Finally, the user has a 0.3 probability of walking to reach ld in 3 minutes (t30–t27).

For specific details on how each probability and reward is determined, refer to the final report linked at the end of the post.

Results

Based on the MTMDP implementation proposed, a single test setup is conducted based on a specific origin and destination (more information in the final report at the end of the post). On top of that, we ran simulations of random locations for route planned based on 4 different models, namely Dijsktra, Google Maps, MTMDP and MTMDP with a focus on crowdedness, during both peak and non-peak hours. The result is as shown.

Observations noted:

- Despite lower bus frequency during non-peak period, the estimated duration focusing on traveling time to reach the destination on peak hours are always longer than non-peak hours.

- MTMDP with crowdedness recommended a route which takes a longer traveling time by suggesting to the user to leave earlier. When examined in more details, the user will be able to board a bus with standing available instead of overly crowded.

- The proposed path’s traveling time by MTMDP is similar to that of Google Maps, except that there is an additional information which states the certainty of time taken for this path.

Lastly, more interesting effects may be observed if the experiment is conducted on a wider scale.

Limitations observed

- The reward function introduced for each of the states are arbitrarily determined based on our understanding of commuters in Singapore and not supported by concrete evidence.

- The training data used to train the model is based on historical data in 2016 and we did not account for weekends data.

- The final uncertainty index is determined by using the mean of the probability across each step. We are unable to average the probabilities as it may be too low to substantiate the effectiveness. A better approach would be to conduct real-life testing and calibrate the result accordingly.

References

- Boyan, J. A., & Littman, M. L. (2001). Exact solutions to time-dependent MDPs. In Advances in Neural Information Processing Systems (pp. 1026-1032).