Overview

Medical specialists see an overwhelming number of patients every day, and the diagnostic process is both tedious and time-consuming. In addition, each medical report is demanding to write and may be too technical for patients to understand.

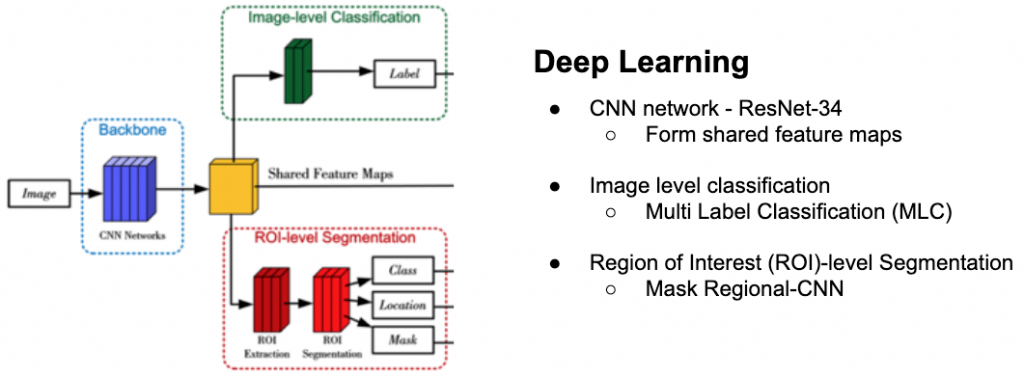

Hence, this project aims to build a system that automates disease detection and report generation. The accompanying figure gives an overview of the deep learning framework.

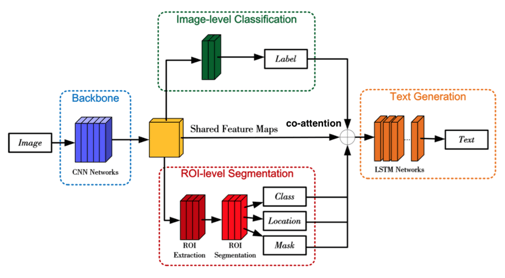

Image detection framework

The input image is first passed through ResNet-34, a Convolutional Neural Network (CNN). An array of features is extracted from the CNN output and sent both to the Multi-Label Classification (MLC) layer (Tsoumakas & Katakis, 2007) and to Region of Interest (ROI)-level segmentation (He et al., 2017).

The MLC layer outputs a probability for each label, computed from the shared features. The 10 tags with the highest probabilities (the number is customisable) are used later for classification.
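The tag selection step can be illustrated with a minimal sketch. It assumes that each label gets an independent sigmoid probability, which is a common choice for multi-label classification but is not confirmed by the project description. The function names and the example logits are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(x: float) -> float:
    """Map a raw score (logit) to an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def top_k_tags(logits: Dict[str, float], k: int = 10) -> List[Tuple[str, float]]:
    """Return the k tags with the highest probability, best first."""
    probs = {tag: sigmoid(score) for tag, score in logits.items()}
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Hypothetical logits for a handful of tags (illustrative values only).
example_logits = {
    "normal": 2.1,
    "cardiomegaly": -0.4,
    "pleural effusion": -1.7,
    "opacity": 0.3,
}

# k is the customisable number of tags; 2 is used here to keep the output short.
top_tags = top_k_tags(example_logits, k=2)
print(top_tags)
```

Because the labels are scored independently, several tags can have a high probability at the same time, which is what distinguishes this from single-label classification.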

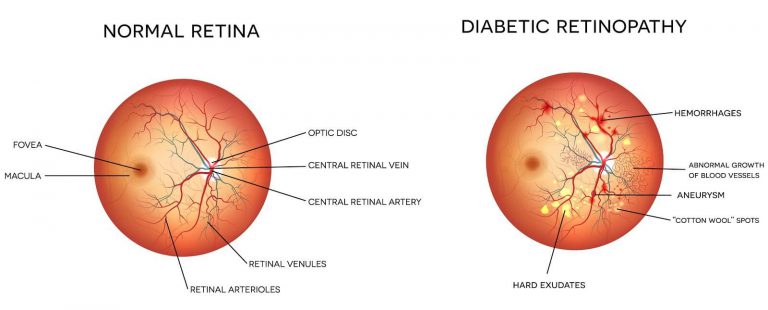

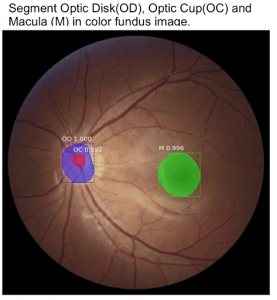

The ROI-level segmentation localises each of the main features of the image and applies a binary mask over it. Based on the mask, a confidence score is generated for each localised feature, as shown in the accompanying figure.
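The description above does not specify how the confidence score is derived from the mask. One simple possibility, shown here purely as an assumption, is to threshold a per-pixel probability map into a binary mask and average the probabilities of the pixels inside it. The function names, the 0.5 threshold, and the example values are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def binary_mask(prob_map: Grid, threshold: float = 0.5) -> List[List[int]]:
    """Threshold per-pixel probabilities into a 0/1 mask."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def mask_confidence(prob_map: Grid, mask: List[List[int]]) -> float:
    """Mean probability over the masked pixels (0.0 if the mask is empty)."""
    selected = [
        p
        for prob_row, mask_row in zip(prob_map, mask)
        for p, m in zip(prob_row, mask_row)
        if m
    ]
    return sum(selected) / len(selected) if selected else 0.0


# Hypothetical 3x3 probability map for one localised feature.
prob_map = [
    [0.1, 0.2, 0.1],
    [0.3, 0.9, 0.8],
    [0.2, 0.7, 0.6],
]

mask = binary_mask(prob_map)
score = mask_confidence(prob_map, mask)
print(mask, round(score, 2))
```

Mask R-CNN implementations usually report the classification score of the detected box rather than a mask average, so this sketch should be read as one illustrative option only.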

Report generation framework

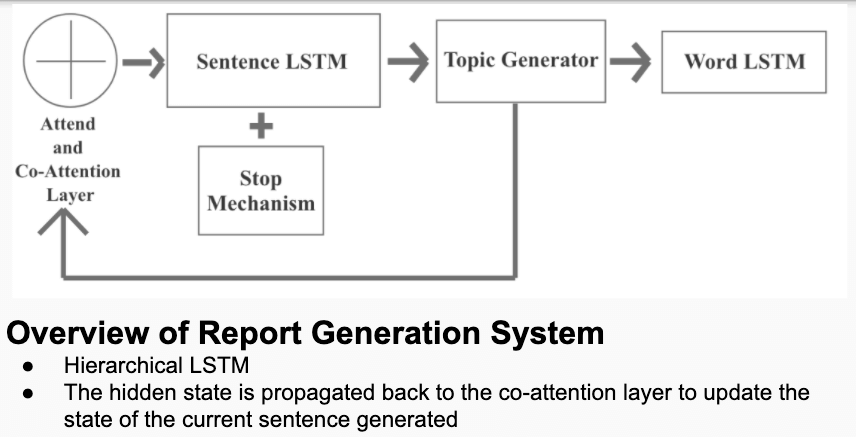

Based on the detected image features and tags, the attend and co-attention layer combines visual and semantic attention to enable more accurate report generation.
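The idea of combining visual and semantic attention can be sketched as follows. This is a simplified illustration under stated assumptions: dot-product scoring and plain concatenation stand in for whatever learned scoring and projection layers the actual model uses. All names and values are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, items: List[Vector]) -> Vector:
    """Attention-weighted sum of items, scored against the query."""
    weights = softmax([dot(query, item) for item in items])
    dim = len(items[0])
    return [sum(w * item[d] for w, item in zip(weights, items)) for d in range(dim)]


def co_attention(query: Vector, visual_feats: List[Vector], tag_embeds: List[Vector]) -> Vector:
    """Concatenate the visual context and the semantic (tag) context."""
    visual_ctx = attend(query, visual_feats)
    semantic_ctx = attend(query, tag_embeds)
    return visual_ctx + semantic_ctx


# Hypothetical inputs: a decoder state, two image regions, three tag embeddings.
query = [1.0, 0.0]
visual_feats = [[1.0, 0.0], [0.0, 1.0]]
tag_embeds = [[0.5, 0.5], [2.0, -1.0], [0.0, 0.0]]

context = co_attention(query, visual_feats, tag_embeds)
print(context)
```

The resulting context vector carries information from both the image regions and the predicted tags, weighted by their relevance to the current decoder state.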

Report generation is based on a single Long Short-Term Memory (LSTM) layer that takes the output of the co-attention layer as input and generates topic vectors, which are then used by the word LSTM. The purpose of the topic generator is to strengthen the context vector from the sentence LSTM (Pascanu et al., 2013).

Results

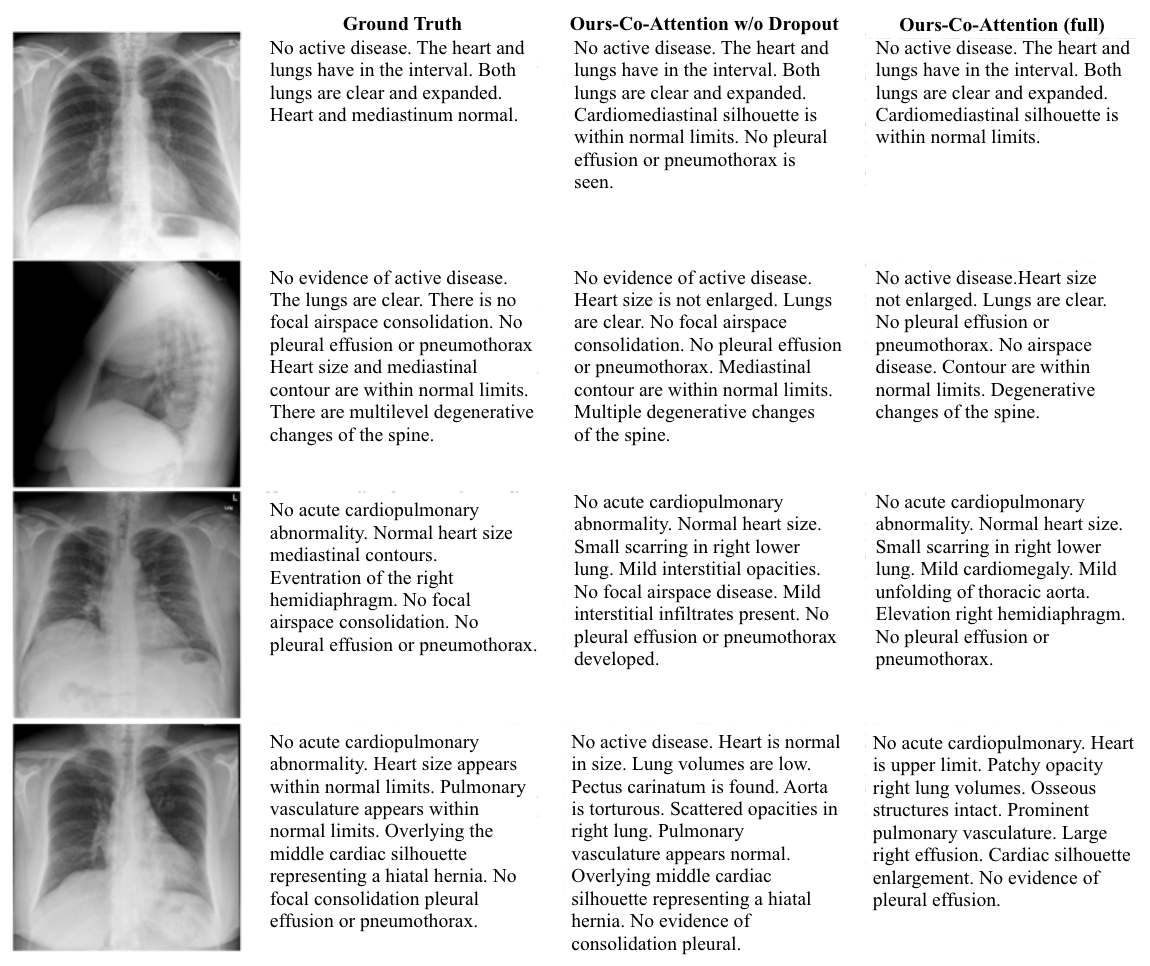

As there is a lack of report data for diabetic retinopathy (DR), the research was also extended to chest X-ray images, where conditions are harder to detect and a detailed report is required for patients to better understand their condition.

At the same time, the image detection algorithm achieved high-accuracy feature detection in a DR detection competition, and the work was published (link below).

Observations noted:

- Generated paragraphs always contain more sentences than the ground truth

- The absence of active disease is described in many ways, e.g.

  - “Cardiomediastinal silhouette is within normal limits”

  - “No pleural effusion or pneumothorax is seen”

- Most of the generated paragraphs focused on describing normal areas

- A specific sequence is observed:

  - High-level description

  - Detailed description of a specific area (e.g. heart, lungs)

Different sentences are generated at different time steps, each focusing on different image features and tags.

Limitations observed

- There is still a lack of understanding of chest conditions, as the domain is highly technical, which makes further optimisation difficult.

- In the training data, normal chest and retina images outnumber images of abnormal conditions.

- Technical limitations prevented further enhancements, such as using ResNet-152 or other feature detection algorithms.

References

- Tsoumakas, G., & Katakis, I. (2007). Multi-label classification: An overview. International Journal of Data Warehousing and Mining (IJDWM), 3(3), 1-13.

- He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2017). Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (pp. 2961-2969).

- Porwal, P., Pachade, S., Kamble, R., Kokare, M., Deshmukh, G., Sahasrabuddhe, V., & Meriaudeau, F. (2018). Indian Diabetic Retinopathy Image Dataset (IDRiD): A database for diabetic retinopathy screening research. Data, 3(3), 25.